10 January 2024

26 May 2023

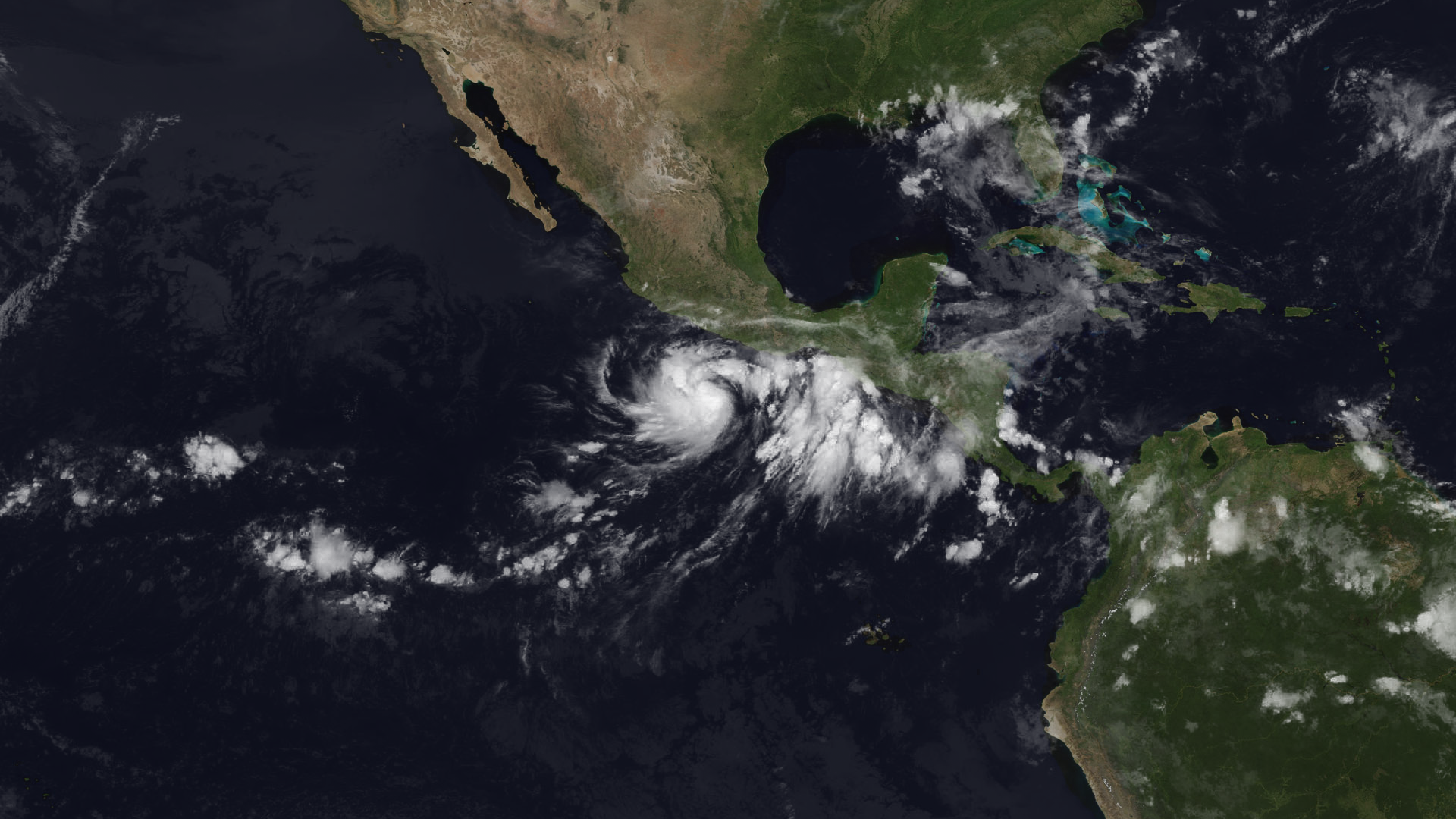

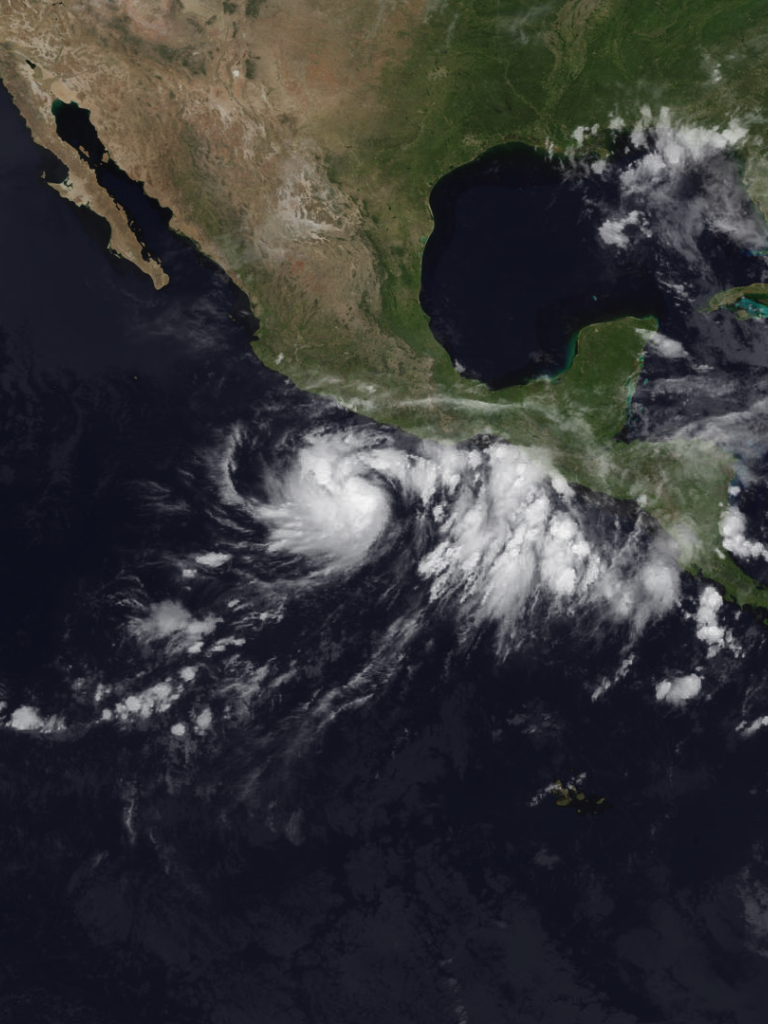

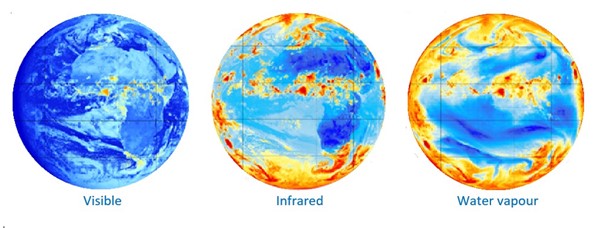

In the 1960s, attention turned to launching satellites that could support weather forecasting on a regular basis. For a long time, the main application of weather satellite data was near-real-time monitoring of the Earth's weather.

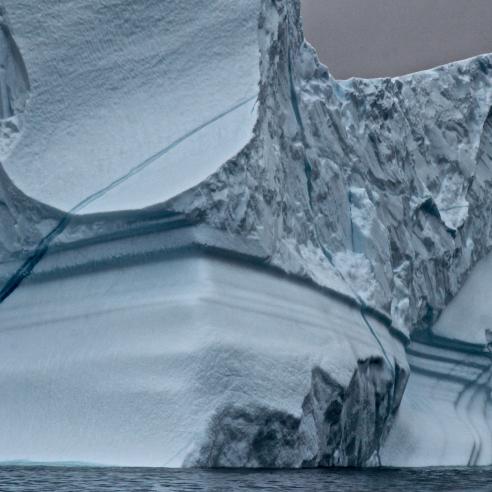

Today, however, it is recognised that early satellite observations, even if they are of inferior quality to modern ones, help us better understand environmental change since the 1960s. They have left a unique legacy, because they recorded the state of the Earth's environment at specific points in time. Exploiting this information retrospectively can help us reconstruct the past state of our planet's climate more precisely, for example to get a better picture of the extent of the polar ice caps in the 1960s.

In addition, modern techniques such as climate reanalysis make it possible to integrate the information collected by the first satellites into long, consistent records. The early satellite observations are therefore crucial for generating digital datasets that span several decades.

Why do we rescue data?

Data rescue is the process of preserving satellite data that are at risk of being lost. This risk most often arises from the loss or deterioration of the storage media, or from the loss of the expertise or documentation needed to read the data.

Data rescue is critically important for climate and reanalysis activities to ensure that scientists and users maintain access to satellite data all the way back to the beginning of operations.

The priority of data rescue is to preserve the original data so that they remain usable by future generations.

Besides rescuing the data, it is necessary to correct them for geometric and radiometric effects and to reformat them to today's digital standards, so that they can be processed by standardised algorithms and software.

For data users, this is a major advance over the bespoke efforts originally required of investigators to analyse the data from each individual satellite instrument.

Yet exploiting the sheer volume of satellite data collected over several decades remains a further challenge for Earth and data scientists.

What satellite data do we rescue?

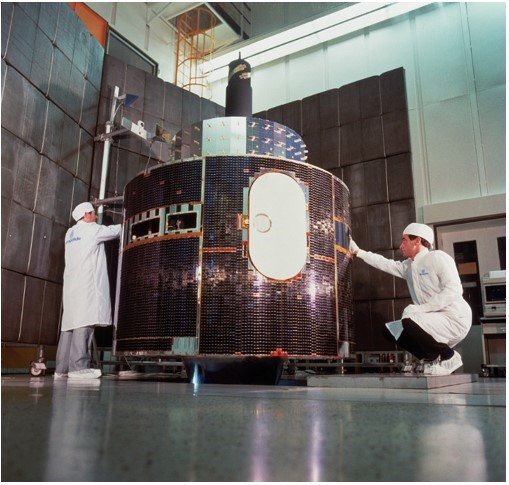

EUMETSAT's first efforts to rescue satellite data for climate research started in the framework of two European Union-funded FP7 projects, ERA-CLIM and ERA-CLIM-2. Among other goals, these projects aimed to rescue and reprocess historic satellite data, such as data from the Meteosat Visible and Infrared Imager (MVIRI) on the Meteosat First Generation (MFG) satellites. EUMETSAT places special emphasis on data from the first Meteosat satellite, whose data were not part of EUMETSAT's archive until a copy of most of them was found in the United States in 2015.

The work on rescuing data from heritage instruments, such as MVIRI on MFG and the Advanced Very High Resolution Radiometer (AVHRR), continued in association with the European Union-funded H2020 project Fidelity and Uncertainty in Climate data records from Earth Observations (FIDUCEO), the Copernicus Climate Change Service (C3S) and the European Space Agency (ESA) Long-Term Data Preservation Programme. These are examples of fruitful collaborations with international partners.

Why do we recalibrate satellite data?

Obtaining information about long-term climate variability and change most often requires combining time series of observations made by different satellite sensors.

Most satellite sensors are calibrated pre-launch, where calibration means establishing the basic model for translating a measured signal (e.g. in counts) into the required observation (e.g. radiance). Although such models often allow for in-orbit corrections, for example gain changes based on on-board measurements, relying on these pre-launch models raises several problems. Long-serving historic sensors often behave differently in orbit than they did before launch, and some effects only manifest once the satellite is orbiting the Earth, such as the effect of the sensor warming or cooling on the measurements.
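To make the notion of a calibration model concrete, here is a minimal Python sketch assuming a simple linear model, radiance = offset + gain * counts, whose gain and offset are re-derived in orbit from two on-board views of assumed-known radiance (for example deep space and an internal blackbody). The two-point scheme, the names `LinearCalibration` and `two_point_calibration`, and the numbers are illustrative assumptions, not the calibration of any particular EUMETSAT instrument; real sensors often need extra terms such as nonlinearity or temperature corrections.

```python
from dataclasses import dataclass


@dataclass
class LinearCalibration:
    """Hypothetical linear calibration model: radiance = offset + gain * counts."""
    gain: float    # radiance units per count
    offset: float  # radiance at zero counts

    def radiance(self, counts: float) -> float:
        # Translate the measured signal (counts) into the required observation (radiance).
        return self.offset + self.gain * counts


def two_point_calibration(c_space: float, c_bb: float,
                          l_space: float, l_bb: float) -> LinearCalibration:
    """Derive gain and offset from two on-board views of assumed-known radiance.

    c_space, c_bb: counts recorded while viewing deep space and a blackbody
    l_space, l_bb: radiances assumed for those two targets
    """
    if c_bb == c_space:
        raise ValueError("the two calibration views must give different counts")
    gain = (l_bb - l_space) / (c_bb - c_space)
    offset = l_space - gain * c_space
    return LinearCalibration(gain=gain, offset=offset)


# Illustrative numbers only (not real instrument values).
cal = two_point_calibration(c_space=40.0, c_bb=840.0, l_space=0.0, l_bb=100.0)
print(cal.gain, cal.offset, cal.radiance(440.0))  # 0.125 -5.0 50.0
```

Re-deriving gain and offset from each calibration view lets the model follow slow in-orbit changes; effects that the on-board views do not capture still require the post-launch analysis described next.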

By analysing the observation time series as a whole, it is possible to develop an in-depth understanding of these effects and to build models that recalibrate the observations post-launch. Among other advantages, post-launch recalibration allows the observations to be compared against other, sometimes superior, satellite measurements.

How do we recalibrate satellite data?

Recalibration is defined as the process of adjusting calibration coefficients and/or obtaining a new calibration model to update or replace the operational calibration used for the original measurements (see the FIDUCEO Project).

The main principle of many recalibration methods is to compare the observations of a particular satellite sensor with reference observations, from another satellite sensor or from a ground-based target, that are independent and of superior quality.
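A minimal Python sketch of this comparison principle: given collocated pairs of raw counts from the sensor being recalibrated and radiances from a trusted reference, fit a new gain and offset by ordinary least squares. The function name `recalibrate` and the data are hypothetical. Real recalibration also needs careful collocation criteria and spectral-response adjustments, and more rigorous approaches use errors-in-variables regression because the reference has uncertainty too; this sketch ignores all of that.

```python
from statistics import fmean


def recalibrate(counts, reference_radiance):
    """Fit a new (offset, gain) so that offset + gain * counts matches
    collocated reference radiances in the ordinary least-squares sense."""
    if len(counts) != len(reference_radiance) or len(counts) < 2:
        raise ValueError("need at least two matched collocations")
    c_mean = fmean(counts)
    l_mean = fmean(reference_radiance)
    s_cc = sum((c - c_mean) ** 2 for c in counts)
    if s_cc == 0:
        raise ValueError("counts must span more than one value")
    s_cl = sum((c - c_mean) * (l - l_mean)
               for c, l in zip(counts, reference_radiance))
    gain = s_cl / s_cc
    offset = l_mean - gain * c_mean
    return offset, gain


# Hypothetical collocations: monitored-sensor counts vs. reference radiances.
counts = [100.0, 200.0, 300.0, 400.0]
reference = [52.0, 102.0, 152.0, 202.0]
offset, gain = recalibrate(counts, reference)
print(offset, gain)  # 2.0 0.5
```

The fitted coefficients would then replace, or correct, the operational calibration used for the original measurements.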

In the absence of superior reference observations, sensor observations simulated from climate reanalysis data, such as the European Centre for Medium-Range Weather Forecasts (ECMWF) reanalysis, may also serve as a reference for a particular satellite sensor.


Why do we estimate the uncertainty of satellite data?

The provision of measurements with rigorous uncertainty estimates is increasingly important for climate monitoring and climate reanalysis.

This is true for Fundamental Climate Data Records (FCDRs) of long-term calibrated and quality-controlled sensor data. It is also true for Climate Data Records (CDRs) of long-term quality-controlled geophysical parameters, such as the Essential Climate Variables defined by the WMO Global Climate Observing System (GCOS-138, 2010). For example, the European Centre for Medium-Range Weather Forecasts (ECMWF) needs uncertainty estimates for the data assimilation in its climate reanalyses.
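To illustrate why assimilation needs uncertainty estimates, consider the simplest scalar case: a model background value and an observation are combined with weights set by their error variances, so an observation with a larger stated uncertainty pulls the result less. The Python sketch below is a textbook simplification (scalar optimal interpolation), not ECMWF's actual assimilation system; the function name `scalar_analysis` and the brightness-temperature values are hypothetical.

```python
import math


def scalar_analysis(background, sigma_b, observation, sigma_o):
    """Combine a background (model) value with an observation, weighting each
    by the inverse of its error variance (scalar optimal interpolation)."""
    if sigma_b <= 0 or sigma_o <= 0:
        raise ValueError("uncertainties must be positive")
    k = sigma_b**2 / (sigma_b**2 + sigma_o**2)    # weight given to the observation
    analysis = background + k * (observation - background)
    sigma_a = math.sqrt(1.0 - k) * sigma_b        # uncertainty of the analysis
    return analysis, sigma_a


# Hypothetical brightness temperatures in kelvin.
print(scalar_analysis(280.0, 1.0, 282.0, 1.0))  # equal uncertainties: analysis 281.0
print(scalar_analysis(280.0, 1.0, 282.0, 3.0))  # poorer observation pulls less: ~280.2
```

Without a credible `sigma_o`, the weight `k` is arbitrary, which is why observation records need uncertainties attached.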

The European Union-funded project Fidelity and Uncertainty in Climate data records from Earth Observations (FIDUCEO) was the first to develop metrologically defensible approaches for producing climate data records from Earth Observations with traceable uncertainty estimates (https://cordis.europa.eu/project/id/638822). These approaches were used to produce several state-of-the-art FCDRs and CDRs, e.g. EUMETSAT's Meteosat FCDR, whose stability and harmonisation were assessed as a proof of principle.
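The core of a metrological approach is a measurement equation through which input uncertainties are propagated. As a minimal Python sketch, assume the linear calibration equation L = offset + gain * counts with uncorrelated inputs; first-order propagation (as described in the JCGM GUM) then gives u(L)^2 = u(offset)^2 + counts^2 * u(gain)^2 + gain^2 * u(counts)^2. The function name and numbers are hypothetical, and this is not the FIDUCEO implementation: a full treatment would also track how errors are correlated between pixels, scan lines and sensors, which this sketch ignores.

```python
import math


def radiance_with_uncertainty(counts, u_counts, gain, u_gain, offset, u_offset):
    """Evaluate L = offset + gain * counts and propagate standard uncertainties
    to first order, assuming the three inputs are mutually uncorrelated."""
    radiance = offset + gain * counts
    # Sensitivity coefficients: dL/d(offset) = 1, dL/d(gain) = counts, dL/d(counts) = gain.
    u_radiance = math.sqrt(u_offset**2 + (counts * u_gain)**2 + (gain * u_counts)**2)
    return radiance, u_radiance


# Hypothetical inputs (illustrative values only).
L, u_L = radiance_with_uncertainty(counts=400.0, u_counts=2.0,
                                   gain=0.125, u_gain=0.001,
                                   offset=-5.0, u_offset=0.3)
print(f"L = {L:.2f} +/- {u_L:.2f}")  # L = 45.00 +/- 0.56
```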

References

GCOS-138 (2010). Implementation plan for the Global Observing System for Climate in support of the UNFCCC. https://library.wmo.int/doc_num.php?explnum_id=3851